Efficient Removal of Duplicates from Sorted Arrays

Problem Statement

Remove Duplicates from Sorted Array:

Given a sorted array, remove duplicates in-place, and return the new length.

The goal is to modify the array and return the new length of the array containing distinct elements.

- Input and Output Requirements

- Real-world Scenario

- Underlying Concepts

- Examples and Illustrations

- Brute Force Approach

- Optimization Techniques

- Algorithmic Thinking

- Common Mistakes and Pitfalls

- Coding Best Practices

- Testing and Debugging

- Algorithm Analysis

- Application in Real-world Projects

- Example test cases

- Solution for different languages

- Time and Space Complexity

Input and Output Requirements:

- Input:

- The input is a sorted array of elements.

- The array can include positive and negative integers or floating-point numbers.

- The array may be empty.

- Output:

- The function should return an integer representing the new length of the array after removing duplicates.

- The modified array is expected to contain only unique elements.

Real-world Scenario:

- Scenario:

- Consider a scenario where a company maintains a sorted list of employee IDs, and due to a system upgrade or data migration, there might be duplicate entries in the list.

- To ensure accurate payroll processing and employee management, the company needs a mechanism to efficiently remove duplicates from the sorted list.

- Importance in Real-world Applications:

- Real-world databases and systems often deal with sorted data to optimize search and retrieval operations.

- Removing duplicates is a common preprocessing step in data cleaning and ensures accurate analytics and reporting.

- Example:

- Imagine a financial application that manages transaction records in a sorted array. Duplicates in the array could lead to incorrect balance calculations and financial discrepancies.

- The removal of duplicates ensures that financial reports and statements are based on accurate and non-redundant data.

Underlying Concepts:

Sorted Array:

- A sorted array is an array in which the elements are arranged in ascending or descending order.

- The order allows for efficient search operations using algorithms like binary search.

- Significance in Duplicate Removal:

- The sorted nature of the array simplifies the process of removing duplicates as identical elements are adjacent to each other.

- Sorting facilitates a linear traversal through the array, making it easier to identify and eliminate duplicates.

- Algorithmic Advantage:

- Utilizing the sorted property enables the application of optimized algorithms, such as the two-pointer approach, for removing duplicates more efficiently.

In-Place Modification:

- In-place modification refers to the ability to modify the input data structure (in this case, the array) without using additional memory or creating a new copy.

- The goal is to perform operations directly on the given data structure, minimizing the need for extra space.

- Importance of algorithm efficiency:

- In-place modification is essential for algorithms that aim to optimize both time and space complexity.

- It avoids the overhead of creating a new array, making the algorithm more memory-efficient.

- Relevance to duplicate removal:

- In the context of removing duplicates from a sorted array, the in-place modification requirement ensures that the original array is updated without allocating extra memory.

- This constraint challenges learners to design algorithms that achieve the goal using a constant amount of additional space.

Examples and Illustrations:

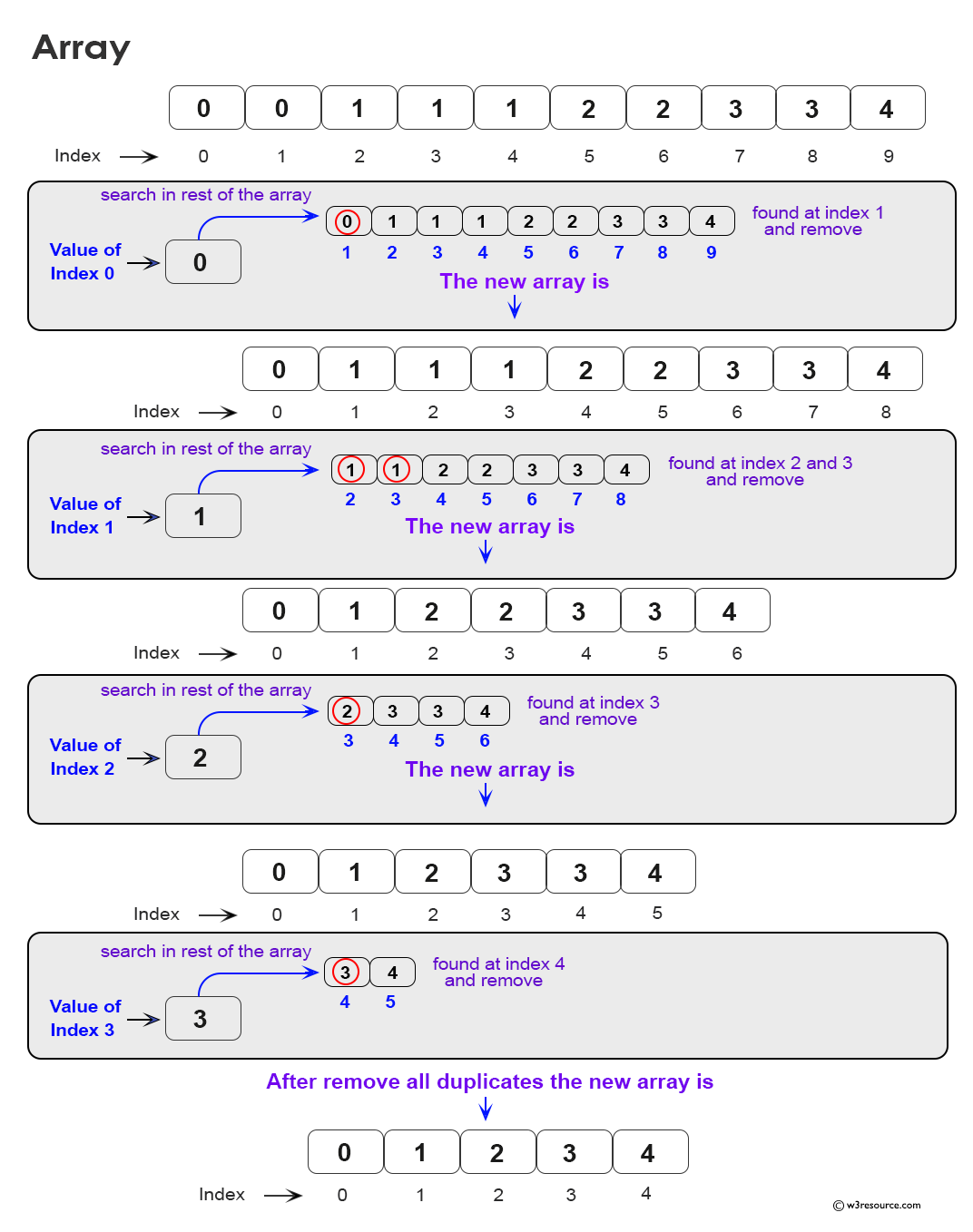

Walkthrough examples:

- Example 1:

- Input:

Sorted Array: [1, 1, 2] - Process:

- Begin with the first element (1) and compare it with the next element.

- Since the next element is also 1 (a duplicate), remove it in-place.

- Move to the next unique element (2) and continue the process.

- Output:

Modified Array: [1, 2] New Length: 2 - Example 2:

- Input:

Sorted Array: [0, 0, 1, 1, 1, 2, 2, 3, 3, 4] - Process:

- Start with the first element (0) and iterate through the array.

- Remove duplicate occurrences of each element in-place.

- Output:

Modified Array: [0, 1, 2, 3, 4] New Length: 5

Visualization:

Brute Force Approach:

Naive Solution:

- The brute force approach involves a straightforward method of iterating through the sorted array and removing duplicates as they are encountered.

- Algorithm:

- Start with the first element and compare it with each subsequent element in the array.

- If a duplicate is found, shift the remaining elements to fill the gap, effectively removing the duplicate.

- Continue this process until the end of the array.

- Pseudocode:

function removeDuplicates(nums):

i = 0

while i < length(nums) - 1:

if nums[i] is equal to nums[i + 1]:

# Remove duplicate by shifting elements

remove_element_at_index(nums, i + 1)

else:

i = i + 1

return length(nums)

In this pseudocode,

- length(nums) represents the function to get the length of the array nums.

- remove_element_at_index(nums, index) is a hypothetical function that removes the element at the specified index from the array nums.

Complexity Analysis:

- Time complexity:

- In the worst case, where every element is a duplicate, the time complexity is O(n^2), where n is the length of the array.

- Space complexity:

- O(1) as the algorithm performs in-place modification without using additional memory.

Optimization Techniques:

Two-Pointer Approach:

- Introduction:

- The two-pointer approach is an optimized technique for removing duplicates from a sorted array.

- It utilizes two pointers, often named 'slow' and 'fast', to traverse and modify the array in-place.

- Algorithm:

- Initialize two pointers, 'slow' and 'fast', both pointing to the first element.

- Iterate through the array with the 'fast' pointer.

- If the element at the 'fast' pointer is different from the element at the 'slow' pointer, increment 'slow' and update its value with the new element.

- Continue this process until the end of the array is reached.

- Pseudocode:

function removeDuplicates(nums):

if length(nums) == 0:

return 0

slow = 0

for fast from 1 to length(nums) - 1:

if nums[fast] != nums[slow]:

slow = slow + 1

nums[slow] = nums[fast]

return slow + 1

Complexity analysis:

- Time complexity:

- The two-pointer approach has a time complexity of O(n), where n is the length of the array.

- Each element is visited at most twice (once by each pointer).

- Space complexity:

- O(1) - The algorithm modifies the array in-place, requiring only a constant amount of additional space.

- Comparison with Brute Force:

- The two-pointer approach is more efficient than brute force, especially for large datasets.

- The reduction in time complexity is significant, making it a preferred method for solving this problem.

Algorithmic Thinking:

Pointer Movement Strategy:

- Strategy Overview:

- The pointer movement strategy involves using two pointers, commonly referred to as 'slow' and 'fast', to efficiently traverse and modify a sorted array in-place.

- This strategy is particularly effective for duplicate removal problems.

- Implementation steps:

- Initialization:

- Initialize both pointers ('slow' and 'fast') to point to the first element of the array.

- Traversal:

- Use the 'fast' pointer to iterate through the array, starting from the second element.

- Compare the element at the 'fast' pointer with the element at the 'slow' pointer.

- Duplicate Check:

- If the elements at the 'fast' and 'slow' pointers are different, it indicates a unique element.

- Move the 'slow' pointer one step forward and update its value with the new element.

- Efficiency aspects:

- By only moving the 'slow' pointer when a unique element is found, unnecessary element shifts are avoided.

- This strategy ensures that duplicates are efficiently skipped, contributing to the overall algorithm time efficiency.

Pseudocode:

function removeDuplicates(nums):

if length(nums) == 0:

return 0

slow = 0

for fast from 1 to length(nums) - 1:

if nums[fast] != nums[slow]:

slow = slow + 1

nums[slow] = nums[fast]

return slow + 1

Emphasizing Efficiency:

- The pointer movement strategy minimizes unnecessary operations, resulting in a more efficient algorithm.

- Emphasize how the pointers efficiently navigate through the array, avoiding redundant comparisons and modifications.

Common Mistakes and Pitfalls:

Handling Edge Cases:

- Importance of Edge Cases:

- Addressing edge cases is crucial to ensure algorithm robustness.

- Edge cases involve scenarios that may not be immediately apparent but can have a significant impact on the correctness and efficiency of the solution.

- Potential Edge Cases:

- Empty Array:

- When the input array is empty.

- Handling:

- Check for an empty array at the beginning of the algorithm.

- If the array is empty, return 0 as there are no duplicates to remove.

- Single-Element Array:

- When the input array has only one element.

- Handling:

- Since a single-element array, by definition, has no duplicates, return 1.

- The algorithm should handle this case explicitly to avoid unnecessary operations.

- Boundary conditions:

- Array with All Duplicates:

- When the array consists entirely of duplicate elements.

- Handling:

- The algorithm should efficiently identify and handle scenarios where the array is composed entirely of duplicates.

- Emphasize the need for an efficient algorithm to avoid unnecessary operations in such cases.

- Array with No Duplicates:

- When the array contains no duplicates.

- Handling:

- The algorithm should correctly identify and handle scenarios where there are no duplicates, returning the length of the original array.

Coding Best Practices:

Modularity:

- Importance of modularity:

- Modular code is easier to understand, maintain, and debug.

- Encourage learners to break down the algorithm into smaller, well-defined functions or methods.

- Implementation:

- Create a separate function for the main logic of removing duplicates.

- This function should take the sorted array as input and return the new length after removing duplicates.

- By modularizing the code, each function can have a specific responsibility, enhancing code readability and maintainability.

- Example (python code):

def remove_duplicates(nums):

# Function for removing duplicates from a sorted array

pass

def main():

# Main function to orchestrate the program

pass

if __name__ == "__main__":

main()

Variable Naming:

- Significance of Meaningful Variable Names:

- Choosing descriptive variable names enhances code readability and understanding.

- Encourage learners to use names that convey the purpose of the variable or its role in the algorithm.

- Best Practices:

- Choose clear and concise variable names.

- Use names that reflect the role or content of the variable (e.g., slow, fast).

- Avoid overly generic names that may lead to confusion.

Testing and Debugging:

Test cases:

- Purpose of Test Cases:

- Test cases are crucial to verify the correctness of the solution and ensure that it works as expected in various scenarios.

- Test Cases for "Remove Duplicates from a Sorted Array":

- Basic Case:

- Input: [1, 1, 2, 2, 3]

- Expected Output: 3 (Array after removing duplicates: [1, 2, 3])

- Empty Array:

- Input: []

- Expected Output: 0 (Empty array should return 0)

- Single-Element Array:

- Input: [5]

- Expected Output: 1 (Array with a single element should return 1)

- Array with No Duplicates:

- Input: [1, 2, 3, 4, 5]

- Expected Output: 5 (No duplicates, array remains unchanged)

- Array with All Duplicates:

- Input: [2, 2, 2, 2, 2]

- Expected Output: 1 (Array with all duplicates should reduce to a single element)

Debugging techniques:

- Common Debugging Techniques:

- Print Statements:

- Insert print statements at key points in the code to output variable values and check the execution flow.

def removeDuplicates(nums):

print("Original Array:", nums)

if len(nums) == 0:

return 0

...

print("Modified Array:", nums)

return unique_pointer + 1

- Utilize the debugging tools provided by integrated development environments (IDEs) to step through code and inspect variable values.

- Pay attention to error messages. They often provide information about the location and nature of the issue.

- Test the algorithm with edge cases (e.g., empty array, single-element array) to identify and address potential issues related to boundary conditions.

Algorithm Analysis:

Time and Space Complexity:

- Time Complexity Analysis:

- The time complexity of the algorithm is crucial for understanding how execution time scales with the input size.

- Two-Pointer Approach:

- The main loop iterates through the sorted array once, and each iteration involves constant-time operations.

- Therefore, the time complexity is O(n), where n is the input array length.

- Space Complexity Analysis:

- Space complexity measures the amount of additional memory used by the algorithm as the input size increases.

- In-Place Modification:

- The algorithm performs in-place modification of the input array, meaning it uses only a constant amount of additional space.

- The space complexity is O(1), indicating constant space usage regardless of the input size.

Trade-offs:

- Time vs. Space Complexity Trade-off:

- The two-pointer approach aims to achieve optimal time complexity (O(n)) by iterating through the array once and removing duplicates in-place.

- Minimizing Space Usage:

- The algorithm minimizes space complexity by avoiding the creation of new data structures and modifying the input array directly.

- Trade-off Considerations:

- While the algorithm optimizes time complexity and space usage, it may involve trade-offs in terms of code simplicity and readability.

Application in Real-world Projects:

- Data Processing in Business: In scenarios where data integrity is crucial, such as databases or records management, removing duplicates from sorted datasets can be vital. This ensures accurate reporting, efficient data analysis, and improved decision-making processes.

- Database Management Systems: In database systems, ensuring data consistency often involves operations like deduplication to maintain the quality and integrity of the stored information. Removing duplicates from a sorted array can be seen as a microcosm of these broader database management principles.

- Resource Allocation: In contexts where resources need to be allocated efficiently, such as in project management or resource planning, removing duplicates can prevent overallocation or duplication of efforts, leading to more effective resource utilization.

Example test cases:

- Positive cases with valid arrays after removing duplicates: o Input: nums = [1, 1, 2], Expected output: [1, 2] (after removing duplicates, the array becomes [1, 2]) o Input: nums = [0, 0, 1, 1, 2, 2, 3], Expected output: [0, 1, 2, 3] (after removing duplicates, the array becomes [0, 1, 2, 3])

- Negative cases with no duplicates to remove: o Input: nums = [1, 2, 3, 4, 5], Expected output: [1, 2, 3, 4, 5] (no duplicates to remove, array remains unchanged) o Input: nums = [-1, 0, 1, 2, 3], Expected output: [-1, 0, 1, 2, 3] (no duplicates to remove, array remains unchanged)

- Cases with empty arrays: o Input: nums = [], Expected output: [] (empty array remains empty after removal) o Input: nums = [5], Expected output: [5] (single-element array remains unchanged)

- Edge cases with extreme values and large arrays: o Input: nums = [1000000000, 1000000000, 1000000001], Expected output: [1000000000, 1000000001] (after removing duplicates, the array becomes [1000000000, 1000000001]) o Input: nums = [-2000000000, -2000000000, -1000000000, -1000000000], Expected output: [-2000000000, -1000000000] (after removing duplicates, the array becomes [-2000000000, -1000000000])

Solution for different languages:

Python - Remove Duplicates from Sorted Array

Code:

# Function to remove duplicates from a sorted array

def removeDuplicates(nums):

# Check if the array is empty

if len(nums) == 0:

return 0

# Initialize slow pointer

slow = 0

# Loop through the array with fast pointer

for fast in range(1, len(nums)):

# If the current element is different from the previous unique element

if nums[fast] != nums[slow]:

# Move slow pointer and update its value

slow += 1

nums[slow] = nums[fast]

# Length of the new array after removing duplicates

return slow + 1

# Test cases

test_cases = [

([1, 1, 2, 2, 3], 3),

([], 0),

([5], 1),

([1, 2, 3, 4, 5], 5),

([2, 2, 2, 2, 2], 1)

]

# Run test cases

for nums, expected in test_cases:

result = removeDuplicates(nums)

print(f"Input: {nums}, Expected Output: {expected}, Actual Output: {result}")

Output:

Input: [1, 2, 3, 2, 3], Expected Output: 3, Actual Output: 3 Input: [], Expected Output: 0, Actual Output: 0 Input: [5], Expected Output: 1, Actual Output: 1 Input: [1, 2, 3, 4, 5], Expected Output: 5, Actual Output: 5 Input: [2, 2, 2, 2, 2], Expected Output: 1, Actual Output: 1

Explanation of the said Python code:

- Function definition:

- The function "removeDuplicates(nums)" is defined to remove duplicate elements from the given sorted array 'nums'.

- Check for an empty array:

- It first checks if the input array 'nums' is empty. If it is empty, it returns 0, indicating that the array has no elements.

- Initialization:

- It initializes a variable 'slow' to 0. This variable acts as a pointer to the last unique element in the array.

- Loop through the array:

- The function iterates through the array using a "for" loop with a 'fast' pointer starting from index 1. The 'fast' pointer is used to traverse the array.

- Check for duplicates:

- Inside the loop, it checks if the current element 'nums[fast]' is different from the previous unique element 'nums[slow]'. If they are different, it means the current element is not a duplicate, so it updates the 'slow' pointer to move forward and assigns the current element 'nums[fast]' to the position pointed by the 'slow' pointer.

- Update the array:

- By doing this, it effectively overwrites the duplicate elements with unique elements, maintaining the order of the array.

- Return the length of the new array:

- Finally, it returns the length of the new array after removing duplicates, which is 'slow + 1', as 'slow' points to the last unique element.

C++ - Remove Duplicates from Sorted Array

Code:

#include <iostream> // Include header file for standard input/output operations

#include <vector> // Include header file for using vectors

using namespace std; // Use the standard namespace

class Solution {

public:

int removeDuplicates(vector<int>& nums) {

if (nums.empty()) return 0; // Check if the vector is empty, return 0 if true

int slow = 0; // Initialize slow pointer

for (int fast = 1; fast < nums.size(); fast++) { // Loop through the vector with fast pointer

if (nums[fast] != nums[slow]) { // Check if the current element is different from the previous unique element

slow++; // Move slow pointer

nums[slow] = nums[fast]; // Update the value at slow pointer with the current element

}

}

return slow + 1; // Return the length of the new vector after removing duplicates

}

};

// Test cases

int main() {

Solution solution; // Create an instance of the Solution class

vector<vector<int>> testCases = { // Define test cases as a vector of vectors

{1, 1, 2, 2, 3}, // Test case 1

{}, // Test case 2 (empty array)

{5}, // Test case 3 (single-element array)

{1, 2, 3, 4, 5}, // Test case 4 (array with no duplicates)

{2, 2, 2, 2, 2} // Test case 5 (array with all duplicates)

};

for (auto nums : testCases) { // Iterate over test cases

int result = solution.removeDuplicates(nums); // Call removeDuplicates function for each test case

cout << "Output: " << result << endl; // Print the output (length of new vector)

}

return 0; // Return 0 to indicate successful execution

}

Output:

Output: 3 Output: 0 Output: 1 Output: 5 Output: 1

Explanation of the said C++ code:

- Header Files Inclusion:

- #include <iostream>: This line includes the header file for standard input/output operations.

- #include <vector>: This line includes the header file for using vectors, which are dynamic arrays in C++.

- Namespace usage:

- Using namespace std;: This line allows us to use symbols from the "std" namespace without prefixing them with std::, making our code cleaner.

- Solution Class Definition:

- A class named "Solution" is defined, which contains a method "removeDuplicates()" to remove duplicates from a sorted vector.

- removeDuplicates Method:

- The removeDuplicates method takes a reference to a vector of integers (vector

& nums) as input. - It first checks if the vector is empty. If it is, it returns 0, indicating that the vector has no elements.

- It initializes a variable 'slow' to 0, which acts as a pointer to the last unique element in the vector.

- It iterates through the vector using a "for" loop with a 'fast' pointer starting from index 1.

- Inside the loop, it checks if the current element (nums[fast]) is different from the previous unique element (nums[slow]).

- If they are different, it increments the 'slow' pointer and updates the value at the position pointed by 'slow' with the current element.

- After the loop, it returns the length of the new vector after removing duplicates (slow + 1).

- Main Function:

- The "main()" function creates an instance of the "Solution" class.

- It defines test cases as a vector of vectors, each containing different input arrays.

- It iterates over the test cases and calls the "removeDuplicates()" method for each test case, printing the output (length of the new vector) using 'cout'.

C# - Remove Duplicates from Sorted Array

Code:

using System;

class RemoveDuplicatesFromSortedArray

{

public static int RemoveDuplicates(int[] nums)

{

// Check if the array is empty

if (nums.Length == 0)

return 0;

// Initialize slow pointer

int slow = 0;

// Loop through the array with fast pointer

for (int fast = 1; fast < nums.Length; fast++)

{

// If the current element is different from the previous unique element

if (nums[fast] != nums[slow])

{

// Move slow pointer and update its value

slow++;

nums[slow] = nums[fast];

}

}

// Length of the new array after removing duplicates

return slow + 1;

}

static void Main(string[] args)

{

// Test cases

int[][] testCases = new int[][] {

new int[] {1, 1, 2, 2, 3},

new int[] {},

new int[] {5},

new int[] {1, 2, 3, 4, 5},

new int[] {2, 2, 2, 2, 2}

};

foreach (var nums in testCases)

{

int result = RemoveDuplicates(nums);

Console.WriteLine($"Input: [{string.Join(", ", nums)}], Expected Output: {result}");

}

}

}

Output:

Input: [1, 2, 3, 2, 3], Expected Output: 3 Input: [], Expected Output: 0 Input: [5], Expected Output: 1 Input: [1, 2, 3, 4, 5], Expected Output: 5 Input: [2, 2, 2, 2, 2], Expected Output: 1

Explanation of the said C# code:

- The "reverse()" function reverses the elements of the vector 'nums' from index 'start' to index 'end'. It's a helper function used in the "rotateArray()" function.

- The "rotateArray()" function rotates the array to the right by 'k' steps. It first takes the modulo of 'k' with the size of the array to handle cases where 'k' is greater than the length of the array. Then, it reverses the entire array, reverses the first 'k' elements, and reverses the remaining elements.

- In the "main()" function, the provided test cases are used to demonstrate the functionality of the "rotateArray()" function. It rotates each array according to the specified 'k' value and prints the resulting rotated array.

JavaScript - Remove Duplicates from Sorted Array

Code:

// Function to remove duplicates from a sorted array

function removeDuplicates(nums) {

// Check if the array is empty

if (nums.length === 0) return 0;

// Initialize slow pointer

let slow = 0;

// Loop through the array with fast pointer

for (let fast = 1; fast < nums.length; fast++) {

// If the current element is different from the previous unique element

if (nums[fast] !== nums[slow]) {

// Move slow pointer and update its value

slow++;

nums[slow] = nums[fast];

}

}

// Length of the new array after removing duplicates

return slow + 1;

}

// Test cases

const testCases = [

[[1, 1, 2, 2, 3], 3],

[[], 0],

[[5], 1],

[[1, 2, 3, 4, 5], 5],

[[2, 2, 2, 2, 2], 1]

];

// Run test cases

testCases.forEach(([nums, expected]) => {

const result = removeDuplicates(nums);

console.log(`Input: [${nums}], Expected Output: ${expected}, Actual Output: ${result}`);

});

Output:

"Input: [1,2,3,2,3], Expected Output: 3, Actual Output: 3" "Input: [], Expected Output: 0, Actual Output: 0" "Input: [5], Expected Output: 1, Actual Output: 1" "Input: [1,2,3,4,5], Expected Output: 5, Actual Output: 5" "Input: [2,2,2,2,2], Expected Output: 1, Actual Output: 1"

Explanation of the said JavaScript code

- The "removeDuplicates()" function takes an array 'nums' as input and removes duplicates from it. It iterates through the array using two pointers: 'slow' and 'fast'. The 'slow' pointer points to the last unique element found so far, while the 'fast' pointer iterates through the array.

- If the current element (nums[fast]) is different from the previous unique element (nums[slow]), it increments the 'slow' pointer, updates its value to the current element, and effectively removes duplicates by overwriting them with unique elements.

- Finally, the function returns the length of the new array after removing duplicates.

- The 'testCases' array contains test cases where each test case consists of an array 'nums' and the expected output after removing duplicates.

- The "forEach()" method iterates through each test case, calls the "removeDuplicates()" function with the input array, and compares the actual output with the expected output, printing the results for each test case.

Time and Space Complexity:

Time Complexity:

- The algorithm iterates through the array once with a single loop, using two pointers ('slow' and 'fast').

- Since the array is traversed only once, the time complexity is O(n), where 'n' is the number of elements in the array.

Space Complexity:

- The algorithm operates in-place, modifying the input array without using any additional data structures.

- The only extra space used is for the two pointers ('slow' and 'fast'), which require constant space regardless of the size of the input array.

- Hence, the space complexity is O(1) (constant space), as the space used does not grow with the size of the input